Bias is one of the largest ethical issues surrounding AI and machine learning today. Machine translation is not exempt from it, as we discussed in our previous post.

Machine translation post-editing (MTPE) is one of the most requested services at the moment, and also quite controversial. Many linguists claim the results are still cumbersome and that editing machine translation can be more time-consuming than translating from scratch.

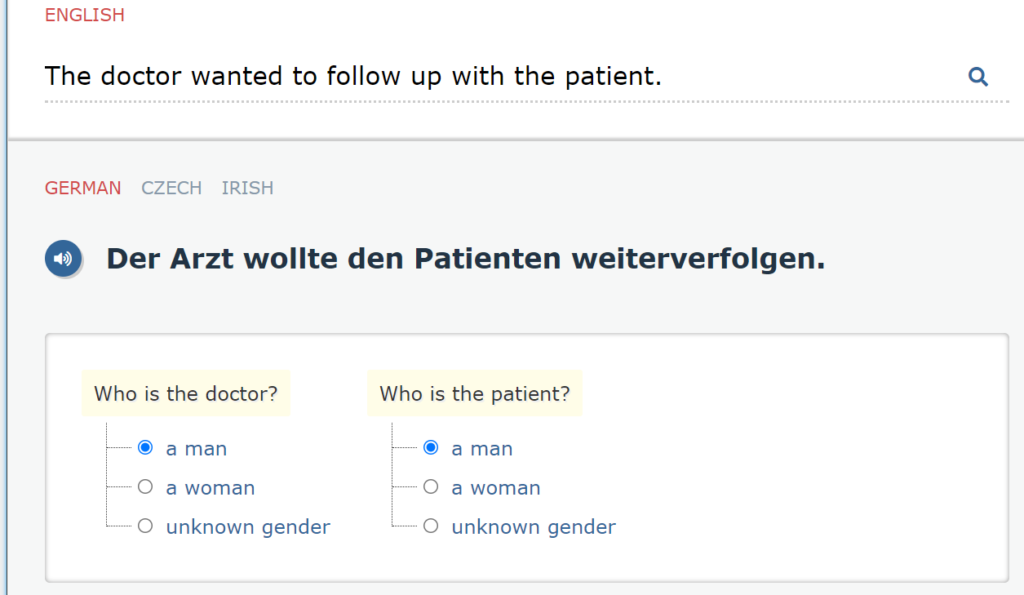

Machine translation models are trained on huge corpora of sentence pairs, in which one sentence is a translation of the other into a different language. However, nuances in language often make an accurate, direct translation difficult. When the source text leaves gender, number or form of address ambiguous, the machine has to guess, and its guess is often biased.

Can we overcome these challenges?

Fairslator is an experimental application which removes many such biases. It works by examining the output of machine translation, detecting when bias has occurred, and correcting it by asking follow-up questions such as “Do you mean male student or female student?” or “Are you addressing the person casually or politely?” Fairslator is a human-in-the-loop translator, built on the idea that you shouldn’t guess if you can ask.
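To make the "ask, don't guess" idea concrete, here is a minimal Python sketch of such a loop for English-to-German gender ambiguity. It is not Fairslator's actual implementation: the `Ambiguity` class, the tiny `LEXICON`, and the `detect_ambiguities` and `correct` functions are all hypothetical names invented for illustration, and the machine translation output is hard-coded rather than fetched from a real engine.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Ambiguity:
    """One ambiguous source word (hypothetical structure, for illustration)."""
    word: str                 # ambiguous word in the English source
    question: str             # follow-up question to show the user
    variants: dict[str, str]  # answer -> German rendering of the word


# Toy lexicon; a real system would need far richer linguistic analysis.
LEXICON = [
    Ambiguity("student", "Do you mean male student or female student?",
              {"male": "Student", "female": "Studentin"}),
    Ambiguity("teacher", "Do you mean male teacher or female teacher?",
              {"male": "Lehrer", "female": "Lehrerin"}),
]


def detect_ambiguities(source: str) -> list[Ambiguity]:
    """Return lexicon entries whose word occurs in the source sentence."""
    words = {w.strip(".,!?").lower() for w in source.split()}
    return [a for a in LEXICON if a.word in words]


def correct(machine_output: str, ambiguity: Ambiguity, answer: str) -> str:
    """Swap whichever variant the machine picked for the one the user chose."""
    chosen = ambiguity.variants[answer]
    for variant in ambiguity.variants.values():
        pattern = rf"\b{re.escape(variant)}\b"
        if re.search(pattern, machine_output):
            return re.sub(pattern, chosen, machine_output)
    return machine_output  # nothing to change


source = "I am a student."
machine_output = "Ich bin Student."  # assumed output of some MT engine

for ambiguity in detect_ambiguities(source):
    print(ambiguity.question)
    answer = "female"  # canned answer; an interactive tool would ask the user
    machine_output = correct(machine_output, ambiguity, answer)

print(machine_output)  # prints "Ich bin Studentin."
```

The sketch only swaps a single noun. Forms of address (casual versus polite) usually require re-inflecting verbs and pronouns as well, which is beyond what a word-level replacement like this can do.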

Want to know more? Who better to ask than the brain behind the brand? We chatted with Michal Měchura, an enthusiastic language technologist who launched this application to overcome bias. Fairslator currently supports Irish, Czech and German. It “flags” potential bias and allows the user to make an informed choice.

Keen on learning more?

Download the Fairslator whitepaper here!